Continuing the series on my experiences as CTO, in this post I share the actions I took to improve an engineering organisation's operational health while scaling an online video streaming platform from 1X to 10X, between May 2017 and October 2020. Getting to a 10X improvement is a journey, which I completed in under 3 years. After reaching the goal, I decided I had learnt enough from the CTO experience and exited, having put a strong leadership succession pipeline in place.

To give you an idea of the major themes I tackled during this time: as a leader I had to lead from the front, back, left and right, setting the direction for my managers to follow (as all of these interventions were new to them), whilst doing my best to respect what came before:

- Establishing the team despite constant re-orgs at the parent company: getting the right people in the right roles at the right time

- Transforming a rag-tag, undisciplined team into a disciplined, clear-headed, focused and organised unit

- Introducing a laser focus on product engineering by unbundling non-core video apps and handing them to other businesses

- Taking a critical look at the technology platform by baselining the architecture and using third-party auditors to rate its scalability

- Improving physical infrastructure (networking, compute, storage and data centres): moving away from self-hosted, self-managed data centres to partnering, and shutting down data centres as needed

- Building an industrial-grade networking stack, leveraging modern peering facilities and overhauling the server infrastructure

- Setting the roadmap for cloud: transitioning first from single-region data centres to multiple data centre deployments, then to running multiple stacks simultaneously, introducing containers and microservices, and finally getting cloud-ready, with serverless as our first leap

- Embracing cloud partnerships with big players: Akamai, Microsoft, AWS, etc.

- Improving product and engineering delivery by overhauling the agile work processes and backlog management

- Introducing communication mechanisms that removed doubt and earned trust across the many business units and teams (we were known as the online pirates doing their own thing)

- Improving risk, governance and security: bringing them to the top of the agenda and raising awareness

- Creating strategic partnerships internally and externally to leverage skills and expertise we couldn't find in-house or afford to build or manage ourselves

- Introducing technical operations controls (Mission Control): more active daily management of operations, 24/7, with increased focus, planning and preparation for peak times like weekends and major events

- Aggressively reducing costs on key platform components whilst capitalising on economies of scale

In this post, I share some of the early context and one of the interventions I introduced in my first 3 months on the job that remains effective to this day, more than five years later: Mission Control Ops.

The dreaded 403: "We're sorry, something went wrong"

I took over a team that wasn't prepared for the intense discipline needed to run and operate a highly available 24/7/365 platform. There were many reasons for this, which I might touch on in another post. I recall coining the term "Bloody May" for a month with so many outages that I wondered: (1) What on earth have I taken on? (2) Is my life going to be consumed by work from now on? (3) Is there any hope for this platform? (4) How am I going to turn this platform around? (5) How much is this job worth to me?

It turned out there were going to be many more "Bloody Mays" in 2017 while my team set about improving stability. That year, the platform's outages racked up about 20 days of downtime, which equates to roughly 95% availability: unacceptable for a video streaming platform. When I left the team in October 2020, we had turned the platform around to 99.5% availability and trending higher. Today, five years on, I'm told availability is much higher, although the usage profile has changed drastically: concurrent streams are limited to a single device, fewer devices are supported, and most of the services have moved to AWS.
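
The availability figures above follow from simple arithmetic. As a rough check, assuming a 365-day year and treating the roughly 20 days of downtime as full outage (partial degradations would count differently):

```latex
\begin{aligned}
A_{2017} &= \frac{365 - 20}{365} = \frac{345}{365} \approx 0.945 \quad (\approx 94.5\%) \\
T_{\text{down}}\big|_{A = 99.5\%} &= (1 - 0.995) \times 365 \approx 1.8 \text{ days} \approx 44 \text{ hours per year}
\end{aligned}
```

In other words, moving from roughly 95% to 99.5% availability means cutting annual downtime from about 20 days to under 2 days.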

Almost every weekend the platform experienced some kind of outage, sometimes lasting the entire weekend. During those outages, the engineering and platform operations teams lacked a methodical way of combing through the issues, debugging and finding root causes. There also wasn't a clear owner for the recovery effort or for communicating with stakeholders (that became part of my job until I found a technical operations person to take over).

Remember my context: I owned an online video platform that was supposed to provide near real-time live video streaming. Our product was used mostly during weekends or whenever there was hype around a sports event (Rugby, Football, UFC, F1) or a popular show premiere (Game of Thrones, etc). So all it took was one customer's Twitter rant about a 403 error for my phone to ring, at times with the same message of concern from five different CEOs! And not from just one country, South Africa: we operated across 50 countries on the African continent. Escalations were rampant, as the company's reputation was on the line. Known for its reliable satellite infrastructure, the company's leaders held a very high bar for their fledgling online video team.

Alas, this online video team was still a fairly young start-up and didn't fully appreciate the high expectations. After all, if the user waited a while and refreshed, the error would likely go away! Historically, this engineering team would not disrupt their weekends to tend to issues unless it was a complete outage, an attitude I would soon change by introducing a concept I called Mission Control.

High Ops load impacted my work/life balance & finances

Another driver that added impetus to fixing these operational support challenges was the impact on my personal time and earnings in my first year. In 2017, I was still a consultant in the role of interim CTO, which meant I billed by the hour, capped at 180 hours a month. Take a look at the profile of my work hours during my 3.5-year tenure leading this engineering team:

My hours in 2017 increased by roughly 34% compared to 2016: an extra 567 hours!

And since my hours were capped at 180 hours a month, I missed out on being paid for 341 hours of extra work: just over R600k of lost billings in 2017! Not a good deal, right? In my consulting life in previous years, my hours were comfortable, my earnings were good and I was never paged for platform outages. What in the world had I got myself into? Interestingly, back then I prioritised learning over money, and I still do: writing this post in 2023 while working at Amazon, I earn less than I did as CTO in 2017-2020. I guess this is who I am. Even earlier in my career, when I was about to be promoted to senior engineer, I switched instead to a junior engineer role in another domain, because I valued learning over job title and pay.

Mission Control Ops - the culmination of many small improvements over time

(Image: Starting Mission Control)

Coming from the set-top box world of satellite broadcast, I had first-hand experience of technical performance and network operations. So I knew from the start that reaching the end goal was going to be a marathon, not a sprint, and that I had to lead from the frontlines, setting the tempo, templates and communication mechanisms to bring people along. I knew I could not lecture from a pedestal; I had to meet the team where they were and effect change through coaching. This meant allowing the team to experience some emotional pain along the way, as leaders often have to do, i.e. promoting learning from mistakes.

Here are some of the techniques that led to the Mission Control system, which remains effective to this day, even after the teams and businesses were later reorganised:

- Start with small habits before introducing systems and automation. The first intervention was building a spirit of ownership, so I introduced weekend health readiness checks. On Fridays we would test and report on the state of the platform going into the weekend; on Mondays we reported on what had happened over the weekend. I created a checklist for the engineering managers to use. Every Friday an email update went to stakeholders covering the fixes that went in from Monday to Thursday (as a result of weekend issues), a summary of events and issues, and the outlook for the weekend. The goal was to be ready and in shape for every weekend's load: whatever issues were picked up over the weekend, teams had to commit to delivering fixes in time for the next one.

- Introduced runbooks: manual checklists along with regular check-ins. In the absence of automation, engineers had to check in regularly on email and WhatsApp groups, posting the status of all their services. With no metrics tooling at the time, the key metrics relied on typical network, CPU and database monitoring. Support engineers relied on manual testing, exercising key customer use cases in the app. I would get a report and progress update, and I would also feed back my own experiences.

- Obsessed over customer sentiment: monitoring social media. I introduced activities for engineers to regularly track social media complaints about the streaming experience and act on them. We couldn't talk to customers directly and had to engage via the business support channels, so I created a partnership between my engineers and those channels. Eventually, the business support channels partnered closely with my org to monitor customers and key events.

- Embracing test automation canaries in production. One of the first engineering changes I made was revamping our testing practice with a strong focus on automated test environments. I had strong technical test leads who led sterling efforts in introducing automated API tests, testing key services in production and providing real-time dashboards of the test suite results. With real-time dashboards from the canary tests, support engineers could easily tell when services were failing and page in the service team's developers. In the first year this paging was still manual, but it was a big step in the right direction. My test lead won the Naspers innovation award for the innovation we delivered in test automation. We also invested in real "robots": WitBe test agents that drove and reported on customer experience tests.

- Invested in full CI/CD from day one. When I joined, the engineering team had a fledgling build system running on a single Jenkins build machine. In the first year we moved the backend services to full CD pipelines and expanded the build infrastructure. By the second year we had full CD for all our frontend applications across all platforms: multiple web browsers, iOS, Android, Smart TV, connected STBs and third-party media devices. With a fully automated software release pipeline and automated testing in production, engineering quality and confidence in our releases improved drastically.

- Adapting to corporate controls: the CAB (Change Advisory Board). As CTO for one line of business, I needed to align our processes with the "Enterprise Change Control Boards" without losing the agility to deploy or roll back quickly, or the flexibility to load test in production. Thanks to our Mission Control process and automated build/release pipelines, we secured just enough compliance to keep our online delivery team separate from traditional heavyweight IT change controls. My tech ops manager took over communicating with the CAB and owning the standard ITIL/COBIT compliance checks.

- Made software engineers more accountable for operations monitoring. The team did have a crude concept of "on-call", which they called "standby", but the standby engineers lacked any real runbook or SOP for checking their services. I made them part of the check-in reporting schedule: if you were an engineer on standby, in the absence of tooling and automated alarming, you had to give me an update on the health of your services according to the schedule. You were being paid overtime for standby, so you needed to earn it. Standby is not about being reactive, waiting for an outage to happen and then acting. That was the old mindset, and I had to change it. It made me quite unpopular, but the change happened all right.

- Andon cord when there was an outage. Whenever there was an outage, I would immediately call everyone onsite (key engineers on standby, their managers, my senior managers and the platform infrastructure team). In the early days it was chaotic: there were no runbooks, and one senior engineer would play the hero, trying to diagnose the problem and save the day! No more. I facilitated the investigations, asked questions and got people to think methodically. We created a board showing how to restart the services, with the sequence and steps to get the stack booted up again. This became a useful runbook, because in 2017 we had to reboot the stack a good few times. Every issue got an action item to follow up and plug the holes in time for the next weekend.

- Retrospectives after an Andon cord event. After an all-nighter slog to address outages, even at 2am, I never let the team go home without an instant retrospective. I wanted people to share their feelings and their experience of the events. I know that change has to strike an emotional chord, otherwise people will not strive to improve their systems. Sometimes these check-ins on feelings took an hour, but they were effective: they got the team to gel and feel strongly connected as one team. Despite the high egos and emotional outbursts (which I myself was sometimes guilty of), people always reconciled and left with a better appreciation of what platform stability means, the structure and discipline required to keep a tech platform running smoothly, and the stakes at hand. I got good feedback on these emotional check-ins.

- Introduced key (near real-time) metrics to track. If you can't see the target, how are you going to hit it? Apart from the standard CPU, memory and network stats, this engineering team did not think from the customer's perspective. So I introduced key metrics that our operations monitoring team and standby engineers had to monitor and report on during every check-in: the number of users streaming right now, traffic through the load balancer, cache hits/misses, playback issues, test results, etc. At first I drew a table on a whiteboard, which became the key status information radiator until we built automated platform intelligence dashboards. I also had technical metrics published to the real-time business metrics dashboards, so everyone could see the current performance of the stack in near real-time in terms of key customer, business and technical metrics.

- Hired a Technical Operations Manager. Whilst leading these initiatives personally, I was grooming another manager to take over technical operations by following my lead. Eventually this manager took over, ran my mechanisms like clockwork, introduced his own enhancements and built out a stronger technical support team. Previously the support engineers didn't understand the technical stack, architecture or APIs, so we closed that gap by investing in training and technical development.

- Controlling communications to stakeholders. I initially protected the team from the heat of various stakeholders. We had a number of outages at the most unfortunate times, and CEOs would come crashing down; some risk officers often asked me "Who should we fire? Who's responsible for the outage? Mo, do you have the right people?". I shouldered this burden for the team and took all the risk on, explaining the nature of the platform, sharing the lessons we had learnt and showing all the work that went into the fixes. Eventually my tech ops manager stepped up to handle stakeholder comms and integrate with the wider enterprise's incident management processes.

- Introduced the Five Whys. For every outage, I drove the Five Whys process, both to explain the outage to my stakeholders in simple English and to teach my engineers how to dive deep and address the root cause. I insisted on the highest standards: if we kept experiencing outages for the same reasons, we weren't learning or diving deep enough. I managed this through constructive feedback, going as far as performance management at the engineer level.

- Created Platform Intelligence Dashboards. We lacked a single pane of glass for monitoring operations, so I funded a start-up team to build a platform that could onboard multiple dashboards from various data sources. Any team with a dashboard providing useful insights into the health of the platform could now be onboarded. The dashboard was super cool and useful, letting operations engineers and me inspect the health of the platform instantly, in near real-time.

- Automated Repetitive Tasks. I inspected the support monitoring engineers' daily activities, checklists and action items, helped them identify patterns of repeated manual work and recovery steps, and then helped them automate those processes. I connected them more closely with the development teams so that their pain points could be addressed in weekly sprints, improving overall monitoring health week on week. Over time, these habits stuck and brought the teams closer to a DevOps mentality.

- Invested in third-party monitoring, alarming and insights systems. At the service team level, our engineers were flying blind when debugging and triaging issues and when monitoring events and transactions in real time. Instead of building observability into our systems from scratch, I approved the purchase of third-party software like AppDynamics. In a short space of time, the engineers were no longer flying blind: they could visualise the flow of information through their APIs and use logging and other traceability tools that cut precious time from our incident response.

- Active planning around events: the War Room. I showed my team how to plan in advance and stay on top of key events. I built the networks and partner touch points across digital comms, social media channels, customer care, internal command centres and external partners, and put down a template for event planning that my tech ops manager eventually took over and ran, owning all the communications thereafter. I also showed how we could leverage our partners' operations monitoring teams, such as Akamai, Irdeto and AWS, to supplement our own monitoring during key events.

- Used metaphors like ICU, High Care and Drain the Swamp. I was vocally self-critical and transparent with my stakeholders from day one. They had given me the job of taking on a technical platform that wasn't initially built for scale. The team had been neglected and lacked a big budget, so they had built a broad digital platform (somewhat ingeniously) on a shoestring, in the face of extreme frugality. When the business decided to aggressively market online video streaming and grow its user base, it had no idea of the amount of technical debt or the state of the platform. So I used language like "I need to drain the swamp to see what rocks and other monsters lie beneath the stagnant waters. And to do this, I need to load test in production!" Or "The system is in ICU with key vitals operating at elevated rates. We are monitoring round the clock and have countermeasures, but this is the best we can do right now because our infrastructure can't cope with the current load. I'm sorry."
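
The production canaries described under "Embracing test automation canaries in production" can be sketched in a few lines of Python. This is a minimal illustration, not the team's actual tooling: the `http_check`, `run_canaries` and `needs_page` names and any health-check URL you pass in are hypothetical. Each check raises on failure, so a 403 or a timeout turns its canary red, and every check runs on each pass so that one failing service does not hide the state of the others.

```python
import time
import urllib.request
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class CanaryResult:
    name: str
    passed: bool
    latency_ms: float
    detail: str = ""


def http_check(url: str, timeout: float = 5.0) -> Callable[[], None]:
    """Build a check that fails unless `url` answers with HTTP 200 (URL is hypothetical)."""
    def check() -> None:
        # urlopen raises HTTPError (e.g. the dreaded 403) for 4xx/5xx responses,
        # and URLError or a timeout error when the service is unreachable.
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            if resp.status != 200:
                raise RuntimeError(f"unexpected status {resp.status}")
    return check


def run_canaries(checks: Dict[str, Callable[[], None]]) -> List[CanaryResult]:
    """Run every check once, recording pass/fail and latency rather than stopping at the first failure."""
    results = []
    for name, check in checks.items():
        start = time.perf_counter()
        try:
            check()
            passed, detail = True, ""
        except Exception as exc:  # any exception marks this canary as failed
            passed, detail = False, f"{type(exc).__name__}: {exc}"
        latency_ms = (time.perf_counter() - start) * 1000
        results.append(CanaryResult(name, passed, latency_ms, detail))
    return results


def needs_page(results: List[CanaryResult]) -> List[str]:
    """Names of failing canaries a support engineer would page the owning team about."""
    return [r.name for r in results if not r.passed]
```

A scheduler (cron, a Jenkins job or similar) could call `run_canaries` every minute with checks such as `http_check("https://example.com/health")` and push the results to a dashboard. Keeping the paging decision in a separate function mirrors the post's first year, when a person still looked at the dashboard and made the call.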
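
The restart board from the Andon cord bullet is essentially a dependency-ordered runbook. The sketch below assumes a hypothetical six-service stack (the service names are illustrative, not the real platform's) and uses Python's standard `graphlib` module (Python 3.9+) to group services into restart waves. Each wave depends only on earlier waves, so its services can be brought up in parallel.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical stack: each service maps to the services that must be up before it.
STACK: Dict[str, Set[str]] = {
    "database": set(),
    "cache": set(),
    "auth": {"database"},
    "catalogue": {"database", "cache"},
    "playback-api": {"auth", "catalogue"},
    "web-frontend": {"playback-api"},
}


def restart_waves(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group services into waves; every wave depends only on services in earlier waves."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a loop
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted so the runbook reads the same every time
        waves.append(ready)
        # In a real restart, mark a wave done only after its health checks pass.
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for step, wave in enumerate(restart_waves(STACK), start=1):
        print(f"Step {step}: start {', '.join(wave)}")
```

Writing the dependencies down as data has a side benefit: `prepare()` fails loudly on a circular dependency, which is better discovered on a Tuesday afternoon than during a 2am restart.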
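
The whiteboard table from "Introduced key (near real-time) metrics to track" maps naturally onto a red/amber/green (RAG) status radiator. This is a sketch under stated assumptions: the metric names and thresholds in `THRESHOLDS` are hypothetical, since the post does not give the team's real targets. It derives a cache hit ratio from raw hit/miss counts and classifies each reading, with a flag for metrics where lower is better, such as playback error rate.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


def cache_hit_ratio(hits: int, misses: int) -> float:
    """Fraction of requests served from cache; 0.0 when there has been no traffic yet."""
    total = hits + misses
    return hits / total if total else 0.0


@dataclass(frozen=True)
class Threshold:
    amber: float
    red: float
    higher_is_better: bool = True


# Hypothetical thresholds for illustration only.
THRESHOLDS: Dict[str, Threshold] = {
    "cache_hit_ratio": Threshold(amber=0.90, red=0.80),
    "playback_error_rate": Threshold(amber=0.01, red=0.05, higher_is_better=False),
    "canary_pass_rate": Threshold(amber=1.0, red=0.95),
}


def rag_status(value: float, t: Threshold) -> str:
    """Classify a single reading as GREEN, AMBER or RED against its thresholds."""
    if t.higher_is_better:
        if value < t.red:
            return "RED"
        return "AMBER" if value < t.amber else "GREEN"
    if value > t.red:
        return "RED"
    return "AMBER" if value > t.amber else "GREEN"


def radiator(readings: Dict[str, float], thresholds: Dict[str, Threshold]) -> List[Tuple[str, float, str]]:
    """One row per metric, like the whiteboard table: (metric, value, status)."""
    return [(name, value, rag_status(value, thresholds[name])) for name, value in readings.items()]


if __name__ == "__main__":
    readings = {
        "cache_hit_ratio": cache_hit_ratio(hits=9_200, misses=800),
        "playback_error_rate": 0.02,
        "canary_pass_rate": 1.0,
    }
    for name, value, status in radiator(readings, THRESHOLDS):
        print(f"{name:<22}{value:>8.3f}  {status}")
```

Publishing rows like these next to the business metrics is one way to give everyone the same near real-time view that the whiteboard gave the people in the room.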

Mission Control as a culture of behaviours indexing highly on ownership

|

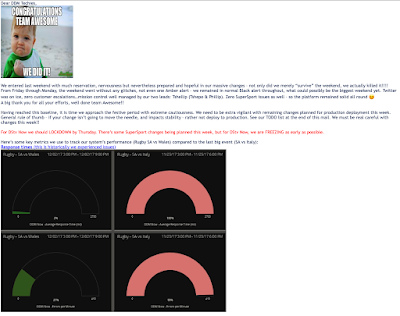

| Thank you email |

In striving for engineering operational excellence, what higher bar to aspire to than NASA's? Improving an engineering team's operational excellence from zero to ninety (when I left) was not easy.

It called for a lot of leading from the frontlines, getting my hands dirty, and influencing and convincing people of their responsibility to uphold high engineering standards, showing my managers and engineers how to plan for and react to crisis situations.

I'm not saying I got it right all the time. I am only human and subject to my own biases and emotional challenges. I was initially swayed by some of the biases of senior stakeholders, and at times the pressure and intensity of their impatience leaked from me onto the team. Over time, I built enough of a thick skin and backbone to know when to buffer the team and when to let the pokes through, especially when an outage was the result of our own negligence, oversight or overconfidence. We shared many late nights, early mornings and all-nighters together, with some hits and misses, but in the end Mission Control was pivotal in improving the operational health and stability of the platform. I should also mention the importance of communicating with the teams at all levels. Introducing a big change in engineering excellence is not easy, so communication is vital, not only to keep the story together but, most importantly, to bring your people along on the journey of executing your vision as a leader.

(Image: Motivating the team)
