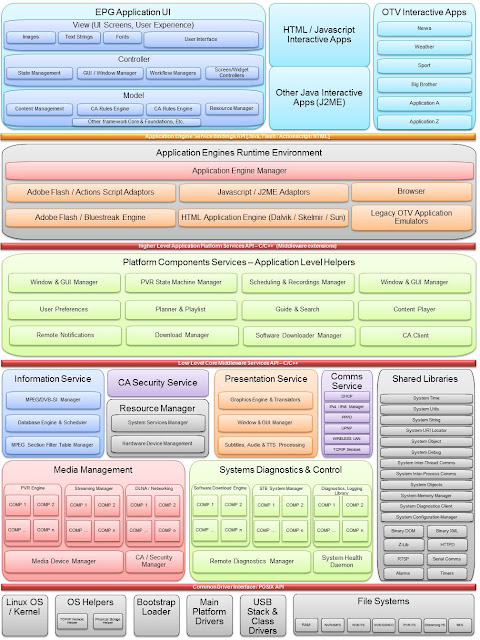

To appreciate why we ended up with a very controlled Development & Integration process, one has to first understand the type of software stack we were building. I've searched high and low for public references to the Middleware Stack we created, but unfortunately they are either not publicly available or very hard to find. So I've reconstructed the architecture model from memory, as I understood it at the time. (I made it a point to learn the architecture by heart to help me better manage the project, and I was also exposed to it in its fledgling design stage as I hacked some POCs together. I plan to expand on the architecture model in a future post, but this will have to do for now.) The reconstructed model is shown in the architecture figure.

Take, for example, the Information Services group. This component group logically implements the STB's ability to parse the broadcast stream, populate an event and service information database, keep that database up to date as the broadcast stream changes, and provide an information services interface to the upwards and sidewards components that depend on this API. An example of an upwards client is the User Interface (EPG), which needs to display information about the program currently being played, the actor details and a three- or four-line description of the program itself (called the Event synopsis). A sidewards component, say the PVR manager, needs to know a program's start and end times to trigger a recording.

The same can be said for the PVR or Media Streaming logical area, which is by far the most complicated piece of the software stack. The PVR engine consists of internal components, not all of which can be tested independently because they require a device pipeline. So the PVR engine exports its own test harness that tests the group as a whole, and exposes the API required to exercise it.

Thus the Middleware stack is a complex mix of many components. Development and Integration were therefore closely coupled activities, although carried out by two very separate teams: a Development team and an Integration team.

Each Development team typically owned a logical group of components, and development was split between the UK, Israel, France and India based on the core strengths of the teams. Remember, this was our sixth or seventh evolution of the Middleware product, with each country owning a certain level of speciality and competence. Each country had effectively written either Middleware products before, or major components thereof, so organisationally the ownership of the Middleware stack had to be spread across the various countries, while keeping a focus on leveraging the key competencies of each region.

The Integration team was no different from the Development team, except that instead of owning components it owned the Middleware Test Harness software stack, which can be considered a product in its own right. The integration team was made up of software engineers who had to understand the component APIs down to the code level, and were also required to debug component code. Integration was therefore a low-level technical activity, ensuring that test cases driven through the component APIs reproduced real customer user experiences as closely as possible. The Middleware Test Harness (MTH) tested both the depth and breadth of middleware functionality by executing test cases against the published APIs, all in the absence of the EPG. This was truly component-based testing. Once we had gained confidence through the MTH, we could be reasonably sure that implementing a client application on the Middleware would be relatively painless, since the functionality had already been exercised. Suffice to say, we had to rely heavily on automated testing, which I'll cover in the section on Continuous Integration & Testing.

By now you should realise that this process called for very solid Configuration Management practices. We had settled on Rational ClearCase as the tool of choice, and adopted much of the Rational UCM terminology for Software Configuration Management. We implemented ClearCase using the distributed model, with servers in each location: so-called "Local Masters" at two sites in the UK, one in Paris, one in Jerusalem and two in Bangalore, with the central "Grand Master" site located in the UK.

With the multi-site version suited to globally distributed development, we had to contend with synchronisation and replication challenges, as with any distributed system, and the constant challenge of aligning time zones. Suffice to say, we had a separate, globally distributed team just for managing the ClearCase infrastructure and administration.

If I get a chance some time, I'll try to dig into the details of this particular type of Configuration Management (CM) system, but there are plenty of examples online anyway. For the purposes of this post, I'll define some basic terms that I'll use throughout as I take you through the Work Package Workflow. Note that this is how I understood them at 50,000 feet, so please forgive my deviations from pukka UCM terminology (the full UCM glossary is available on IBM's site):

- Stream - Basically a branch for individual development / integration work, automatic inheritance of other streams

- Baseline - A stable, immutable collection of streams, or versions of a stream

- Project - Container for development & integration streams for a closely related development/integration activity

- Rebase - Merge or synchronisation point with the latest changes from a chosen baseline

- Delivery - The result of merging or pushing any changes from one stream into another stream

- Tip - The latest up-to-date status of the code/baseline from a particular stream
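To make these terms a little more concrete, here is a toy model in Python. It is a minimal sketch under my own simplifying assumptions, not the ClearCase or UCM API: a stream is reduced to the set of change ids (activities) it contains, merges never conflict, and the class and method names (`Stream`, `Baseline`, `rebase`, `deliver`) are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Baseline:
    """Hypothetical: an immutable snapshot of the changes a stream contained."""
    name: str
    changes: frozenset[str]


@dataclass
class Stream:
    """Hypothetical: a branch of work, modelled as the set of change ids it holds."""
    name: str
    changes: set[str] = field(default_factory=set)

    def check_in(self, change_id: str) -> None:
        self.changes.add(change_id)

    def tip(self) -> frozenset[str]:
        # The latest up-to-date state of this stream.
        return frozenset(self.changes)

    def make_baseline(self, name: str) -> Baseline:
        # frozenset makes the snapshot immutable; later check-ins don't affect it.
        return Baseline(name, frozenset(self.changes))

    def rebase(self, baseline: Baseline) -> None:
        # Synchronise with a chosen baseline by inheriting all of its changes.
        self.changes |= baseline.changes

    def deliver(self, target: "Stream") -> None:
        # Push this stream's changes into another stream (no conflicts in this toy).
        target.changes |= self.changes


# Usage: a WP stream rebases off integration, adds work, then delivers back.
integration = Stream("integration")
integration.check_in("fix-epg-crash")
bl1 = integration.make_baseline("BL_1")
wp = Stream("wp1_dev")
wp.rebase(bl1)
wp.check_in("wp1-feature")
wp.deliver(integration)
print(sorted(integration.tip()))
print(sorted(bl1.changes))
```

Note that the baseline taken before the delivery still holds only the original fix, which is the whole point of baselines being immutable.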

At the highest level, we had a Configuration Management (CM) system that implemented Rational's UCM process. A CM team defined the architecture and structure for the various projects, and everyone had to adopt the CM team's policy (naming conventions, project structure, release & delivery processes). Our project was under the full control of the Middleware Integration team, which defined the rules for component development, integration and delivery. Whilst component development teams had the freedom to maintain their own component projects under CM, they had to satisfy the CM and Integration rules for the project, and this was controlled by the Integration team.

Integration therefore maintained the master deliverable for the product. This was referred to as the Grand Master, or Golden Trunk or Golden Tree. Integration was responsible for product delivery and owned the work package delivery. Integration was therefore King, the Gate Keeper, the Build Master: nothing could get past Integration! This was in keeping with best practices of strong configuration management and quality software delivery. The primary reason for this strong Integration process was to keep firm control of regression. We prioritised preventing regression over delivering functionality; in some cases we had to revert deliveries of new features just to maintain an acceptable level of regression.

Integration actually built the product from source code, so much so that Integration controlled the build system and makefile architecture for the entire product, and component owners simply had to fall in line. Accordingly, we had a core group of about four people responsible for maintaining the various build trees at major component levels: Drivers, Middleware, UI & full stack builds. Components would deliver their source trees from their Component streams into the Integration stream, and Integration also maintained a test harness that exercised component code directly.

This build and configuration management system was tightly integrated with a Continuous Integration (CI) system that not only triggered the automatic build process but also triggered runs of automated tests and tracked regressions. It even went as far as auditing the check-ins from the various component development and integration streams, looking for missed defects as well as defect fixes that went in without Integration's approval... gasp for breath here... the ultimate big brother keeping an eye on quality deliveries.

In essence, we implemented a promotion-based pattern of SCM.
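A promotion-based pattern means code only moves up the hierarchy (WP stream, then Local Master, then Grand Master) by passing a gate at each step. The sketch below is hypothetical: the gate contents (`component_tests`, `core_sanity` and so on) are placeholders I've chosen for illustration, since the actual testing and quality constraints are discussed later.

```python
from enum import IntEnum


class Level(IntEnum):
    WP_STREAM = 0
    LOCAL_MASTER = 1
    GRAND_MASTER = 2


# Hypothetical gates: checks that must pass before promotion INTO each level.
GATES = {
    Level.LOCAL_MASTER: {"component_tests", "wp_integration_tests"},
    Level.GRAND_MASTER: {"component_tests", "wp_integration_tests",
                         "core_sanity", "regression_suite"},
}


def promote(current: Level, passed: set[str]) -> Level:
    """Move up one level only if every check for the next gate has passed."""
    if current is Level.GRAND_MASTER:
        return current  # already at the top of the hierarchy
    target = Level(current + 1)
    missing = GATES[target] - passed
    if missing:
        raise RuntimeError(f"blocked at {target.name}: missing {sorted(missing)}")
    return target


level = promote(Level.WP_STREAM, {"component_tests", "wp_integration_tests"})
print(level.name)
try:
    promote(level, {"component_tests", "wp_integration_tests"})
except RuntimeError as err:
    print(err)
```

Here the WP reaches its Local Master, but is held back from GM until the (hypothetical) sanity and regression checks have also passed.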

The figure below is a high-level illustration of what I described earlier. It highlights the distributed model of CM, illustrating how the Grand Master (GM) was kept separate from localised Development/Integration streams, and also shows the concept of isolated Work Package (WP) streams. To summarise: component development and delivery were isolated to separate WP streams, and the WP stream was controlled by Integration. Because WPs were distributed by region, each WP delivered into the Local Master of the region where the bulk of the WP was implemented. From the Local Master, subject to a number of testing and quality constraints (to be discussed later), the WP was ultimately delivered to GM.

The figure above shows an example where ten (10) WPs are being developed, distributed across all the regions. The sync and merge point into GM required tight control and co-ordination, so much so that we had another team responsible for co-ordinating GM deliveries, producing what we called the "GM Delivery Landing Order", just as an Air Traffic Controller co-ordinates the landing of planes (more on this later...).

The figure also highlights the use of Customer Branches, which we created only when it was deemed critical to support and maintain the customer project in the run-up to launch. Since we were building a common Middleware product, whose source code was neither branched per customer nor contained customer-specific functionality or APIs, we maintained strict control over branching, and promoted a win-win argument to customers: they actually benefit by staying close to the common product code, as they naturally inherit new features essentially for free! Once the customer launched, it became a priority to rebase to GM as soon as possible so that component teams didn't have to manage unnecessary branches. To support this we also had a process for defect fixing and delivery: fixes would happen first on GM, then be ported to the branch. This ensured that GM always remained the de-facto stable tree with the latest code, giving customers the confidence that they would not lose any defect fixes from their branch release when merging or rebasing back to GM. This is where the auditing of defect fixes came into the CI/CM tracking.
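The "fix on GM first, then port to branch" rule lends itself to a simple automated audit. Here's a minimal sketch of the kind of check the CI/CM tracking could run; the `Fix` record, stream names, defect ids and dates are hypothetical and made up, whereas the real audit ran against the CM system's check-in records.

```python
from datetime import date
from typing import NamedTuple


class Fix(NamedTuple):
    """Hypothetical record of a defect fix delivered to a stream."""
    defect_id: str
    stream: str       # "GM" or a customer branch name
    delivered: date


def audit_fix_order(fixes: list[Fix]) -> list[str]:
    """Flag branch fixes that break the 'fix on GM first, then port' rule."""
    # Earliest GM delivery for each defect.
    first_on_gm: dict[str, date] = {}
    for f in fixes:
        if f.stream == "GM":
            prev = first_on_gm.get(f.defect_id, f.delivered)
            first_on_gm[f.defect_id] = min(prev, f.delivered)

    findings = []
    for f in fixes:
        if f.stream == "GM":
            continue
        gm_date = first_on_gm.get(f.defect_id)
        if gm_date is None:
            findings.append(f"{f.defect_id}: fixed on {f.stream} but not on GM")
        elif f.delivered < gm_date:
            findings.append(f"{f.defect_id}: fixed on {f.stream} before GM")
    return findings


# Made-up example data.
fixes = [
    Fix("D-101", "GM", date(2010, 5, 3)),
    Fix("D-101", "cust_branch", date(2010, 5, 4)),  # OK: GM first
    Fix("D-102", "cust_branch", date(2010, 5, 4)),  # never fixed on GM
    Fix("D-103", "cust_branch", date(2010, 5, 1)),  # branch fix came first
    Fix("D-103", "GM", date(2010, 5, 2)),
]
for finding in audit_fix_order(fixes):
    print(finding)
```

A branch fix with no GM counterpart is the dangerous case: it is exactly the fix a customer would lose when rebasing back to GM.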

Also shown in the figure above is the concept of the FST stream, as I highlighted earlier. Basically, an FST is a long-lead development/integration stream reserved for complex feature development that could be done in relative isolation from the main GM. For example, features such as Save-From-Review-Buffer, a Progressive Download component or an Adaptive Bit-Rate Streaming engine could all be developed off an FST and later merged with GM. As I said earlier, FSTs had to be rebased regularly with GM to keep the code healthy and prevent massive merge activities towards the end.

Overview of Component Development Streams

The Component Tip, just like the Grand Master (GM), represents the latest production code common to all customer projects for that component. Work Package development happens on an isolated WP Development Stream that delivers to an Integration WP Stream. Only when the WP is qualified as fit-for-purpose does that code get delivered, or merged back, to tip. On each development stream, CI can be triggered to run component-level tests for regression. Components also maintain customer branches for specific maintenance / stability fixes only. Similarly, an FST stream is created for long-lead feature development, always with regular, frequent rebases to tip.

This is illustrated by the graphic below:

Overview of a Development Team's CM Structure

Overview of Work Package Development & Integration

Now that we've covered the basics of the CM system and the concept of Work Packages (WPs), it's time to illustrate, at a high level, how we executed this. Of course, development and integration threw up many situations that called for other solutions, but we always tried to maintain the core principles of development & integration, which can be summarized as:

- Isolated development / integration streams for both feature development and defect fixes

- Defects that impact more than one component, with integration touch-points, call for a WP

- Controlled development and delivery

- Components must pass all associated component tests

- Integration tests defined for the WP must pass, exceptions allowed depending on edge-cases and time constraints

- Deliveries to Grand Master or Component Tip tightly controlled, respecting the same test criteria outlined above
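The delivery rules above boil down to a gate check. Here's a minimal sketch, assuming per-component pass/fail results and per-scenario integration results are available; the `waived` set stands in for the agreed exceptions for edge cases and time constraints, and all names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class WPResults:
    """Hypothetical test results collected for one WP before delivery."""
    component_tests: dict[str, bool]       # component -> all its tests passed?
    integration_tests: dict[str, bool]     # integration scenario -> passed?
    waived: set[str] = field(default_factory=set)  # agreed exceptions


def ready_to_deliver(r: WPResults) -> tuple[bool, list[str]]:
    """Every component must pass; every integration scenario must pass
    unless an exception has been agreed for it."""
    blockers = [f"component {c} failing"
                for c, ok in r.component_tests.items() if not ok]
    blockers += [f"scenario {s} failing"
                 for s, ok in r.integration_tests.items()
                 if not ok and s not in r.waived]
    return not blockers, blockers


results = WPResults(
    component_tests={"A": True, "C": True},
    integration_tests={"S1": True, "S2": False, "S3": False},
    waived={"S3"},
)
print(ready_to_deliver(results))
```

Keeping component tests out of the waiver mechanism mirrors the rule that components must pass all their tests, while only integration scenarios allow exceptions.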

The next picture illustrates what I've described thus far. It is rather simplified, showing a case where four WPs (identified as WP1...WP4) are planned, along with the components impacted, the number of integration test scenarios defined as acceptance criteria for each WP, and the planned delivery order.

Overview of Work Package Development / Integration streams

In this example I've highlighted just five Components (from a set of eighty) and just four WPs (in reality, we had on average 30 WPs per iteration, with six to eight components impacted in a typical WP). Also shown is the integration location for each WP; remember, we were doing globally distributed development. So WP1, made up of Components A, B, D and E, would be integrated and delivered from London, UK; WP2, with Components A and C, from Paris; WP3, with Components D, B, A and C, from India; and WP4, with Components A-E, from Israel. What's not shown, however, is that each component was itself distributed (which challenged integration because of regional time differences)...

Component A seems to be quite a core component, as it's impacted in all four Work Packages (WPs). This means the team has to plan its work not only according to its own internal constraints, but also to match the project's plan for the iteration, so that the delivery order is observed: WP2 delivers first, then WP3, followed by WPs 1 & 4. In parallel, Component A has some severe quality problems, in that it has not reached the required branch coverage, line coverage and MISRA count, so this team has to deliver not only the planned WPs but also meet the quality target for the release at the end of the iteration. There is another challenge: probably due to scheduling constraints or the complexity of the work, this team has to start development on all four WPs on the same day. So in addition to the separate, parallel quality stream, the team works on the WP requirements on four separate WP development streams.

Component teams largely followed Scrum: they maintained internal sprint backlogs that mapped to the overall project iteration plan. So Comp A would have planned its development strategy in advance, including code review points, sync points, etc. Note, though, that it was often frowned upon to share code between WP streams if the WP hadn't delivered yet. This was so that Integrators tested only the required features of the given WP, without stray code from other WPs that wouldn't be exercised by the defined integration tests. Remember that Integrators exercised component source code through the public interfaces specified in the Work Package Design Specification (WPDS).

Component A then has to synchronise changes carefully, aiming to stay close to component tip to inherit the latest code quality improvements, and then co-ordinate the rebase when the WP delivery happens. When Integration gives the go-ahead for delivery, Comp A rebases the WP stream off Tip and creates a candidate baseline. Integration then applies final testing to the candidate baselines of all impacted components, and if there are no issues, the components create the release baseline (.REL), in UCM-speak, and post the delivery to Grand Master. Looking at the example for Comp A, when WP2 delivers to GM, all the code for WP2 is effectively pushed to component tip. This automatically triggers a rebase of all child WP streams to inherit the latest code and merge with tip, so WP3, WP4 and WP1 immediately rebase and continue working on their respective WP streams until it's their turn to deliver. So there is this controlled cycle of rebase to tip, deliver, rebase, deliver...

The same applies to Component E, although it is much simpler, since Comp E delivers just two WPs, WP1 & WP4. According to this team's plan, WP1 starts first, followed by WP4: the rebase and merge happen when WP1 delivers, followed by the WP4 delivery.
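The rebase-deliver cycle for Component A (and, more simply, Component E) can be simulated in a few lines. This is a toy sketch, assuming each stream is just a set of changes and merges never conflict; the function name and the `-work` change ids are hypothetical.

```python
def run_delivery_order(order: list[str]) -> tuple[list[tuple[str, list[str]]], set[str]]:
    """Toy simulation of one component's rebase-deliver cycle.

    Every WP stream starts from the same tip. When a WP delivers, its work
    lands on tip and every WP stream still waiting rebases to inherit it.
    Returns (log of (delivered WP, streams that rebased), final tip).
    """
    tip: set[str] = set()
    # Hypothetical change ids: each WP stream holds only its own work at first.
    streams = {wp: {f"{wp}-work"} for wp in order}
    log = []
    for wp in order:
        tip |= streams.pop(wp)            # deliver: WP changes merged to tip
        for waiting in streams.values():
            waiting.update(tip)           # rebase waiting streams onto new tip
        log.append((wp, list(streams)))
    return log, tip


# Component A's delivery order from the example: WP2, WP3, then WP1 and WP4.
log, tip = run_delivery_order(["WP2", "WP3", "WP1", "WP4"])
for delivered, rebased in log:
    print(f"{delivered} delivered; rebased: {rebased}")
```

Because waiting streams only ever pick up code that has already landed on tip, no WP stream carries undelivered code from another WP, which is the same property the "don't share code between WP streams" rule protects.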

There are many different scenarios for component development, and each component presented its own unique challenges. Remember, this mentality is all about working on different streams that impact common files and common source code; for example, the same function or API might be impacted in all four work packages, so internal team co-ordination is fundamental.

To close this section on development streams, note that at each delivery or rebase point, component teams had to comply with all testing requirements, so at every point in the delivery chain the CI system was triggered to run automated tests...

Walk through of the WP Integration Stream

The figure above shows the Integration Stream in the bottom right-hand corner, illustrating the Grand Master Stream along with the regional Local Masters and their child WP Integration streams. In a similar vein to the component stream, it also shows the activities involved in WP delivery to a Local Master and the ultimate delivery into the Grand Master (GM).

Looking at WP4, which impacts all the components, we can see that the component development streams feed into the WP integration stream. What happens is the following. At the start of the iteration, which marks the end of the previous iteration where a release baseline was produced off GM, all Local Masters (LMs) rebase to the latest GM. Once the LMs have rebased, Integrators in the various regions create WP Integration streams for all WPs planned for that iteration. The Integrator uses the WP stream to put an initial build of the system together, and starts working on his/her Middleware Test Scenarios. Test Scenarios are test cases implemented in source code using the Middleware Test Harness Framework. This framework is basically a mock or stubbed Middleware that can be used to simulate or drive many of the Middleware APIs. For each WP, the components publish their API changes (the header files) in advance and provide stubbed components (APIs defined but not implemented) to the Integrator. The Integrator then codes the test scenarios, making sure the test code is correct and working against the component stubs until all components deliver their implemented functionality into the WP stream.

The Integrator does a first-pass run, removes the stub components, replaces them with the real components and begins testing. If the Integrator uncovers issues with the components during testing, the components deliver fixes off their WP streams. This collaborative process repeats until the Integrator is happy that the core test scenarios are passing. In parallel, the components run their own unit tests and code quality tests, and prepare for the Integrator's call for candidates. The Integrator runs the Middleware Core Sanity and other critical tests, then calls for candidates from the components. Depending on the severity of the WP, the Integrator might decide to run a comprehensive suite of tests on the CI system, leaving the testing to run overnight or over the weekend to track major regressions. If all is well, the components deliver releases to the WP stream (this is usually the tip of the component).
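The stub-then-real workflow is essentially coding test scenarios against a published interface and swapping the implementation underneath. Here's a minimal Python sketch of the idea; the real scenarios were written against the components' published header files in the MTH framework, and the interface, class names and service id below are hypothetical.

```python
from abc import ABC, abstractmethod


class EventInfoAPI(ABC):
    """Hypothetical published interface, standing in for a component header."""

    @abstractmethod
    def current_event_title(self, service_id: int) -> str: ...


class EventInfoStub(EventInfoAPI):
    """Stub delivered up front: API defined but not implemented."""

    def current_event_title(self, service_id: int) -> str:
        raise NotImplementedError("stub: component not delivered yet")


class EventInfoComponent(EventInfoAPI):
    """Stand-in for the real component once it lands in the WP stream."""

    def __init__(self, events: dict[int, str]) -> None:
        self._events = events

    def current_event_title(self, service_id: int) -> str:
        return self._events[service_id]


def scenario_now_showing(api: EventInfoAPI) -> str:
    """A test scenario coded only against the published interface."""
    try:
        title = api.current_event_title(101)  # hypothetical service id
    except NotImplementedError:
        return "BLOCKED"  # scenario code runs, but the component isn't there yet
    return "PASS" if title else "FAIL"


print(scenario_now_showing(EventInfoStub()))
print(scenario_now_showing(EventInfoComponent({101: "Evening News"})))
```

The scenario itself never changes between the first pass and the real run; only the object passed in does, which is what lets the Integrator get the test code right before the components deliver.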

Some co-ordination is required on the part of the Integrator. Remember, there are multiple WPs being delivered from various integration locations. As his/her WP nears completion, the Integrator broadcasts an announcement to the world: "Hey, my WP4 is ready for delivery, I need a slot to push my delivery to Master". This alerts the global product integration team who, just as an air traffic controller looks out for other planes wishing to land, check for other WPs in the delivery queue, take note of the components impacted, and then allocate a slot for the WP in the delivery queue.

If there are other projects with higher priority that pre-empt WP4's delivery, the Integrator has to wait and, in the worst case, rebase, re-run tests, call for candidate releases again, re-run regression tests, and only then deliver to Master.
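The slot allocation can be sketched as a priority queue. This is a minimal sketch, assuming a numeric project priority (lower number lands first, first come first served among equals) and assuming that a waiting WP only needs to rebase and re-test when it shares components with a WP that has just landed; the class and method names are hypothetical.

```python
import heapq
from itertools import count


class LandingQueue:
    """Hypothetical 'air traffic control' for GM deliveries.

    The highest-priority request lands first (first come, first served among
    equals). Waiting WPs that share a component with the WP that just landed
    are reported as needing a rebase and re-test.
    """

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str, frozenset[str]]] = []
        self._seq = count()  # tie-breaker that preserves request order

    def request_slot(self, wp: str, components: set[str], priority: int) -> None:
        # Lower number = higher priority.
        heapq.heappush(self._heap, (priority, next(self._seq), wp, frozenset(components)))

    def land_next(self) -> tuple[str, list[str]]:
        _, _, wp, landed = heapq.heappop(self._heap)
        must_rebase = [w for _, _, w, comps in sorted(self._heap) if comps & landed]
        return wp, must_rebase


q = LandingQueue()
q.request_slot("WP4", {"A", "B", "C", "D", "E"}, priority=2)
q.request_slot("WP2", {"A", "C"}, priority=2)
q.request_slot("WP9", {"B"}, priority=1)  # higher-priority project pre-empts
print(q.land_next())
print(q.land_next())
```

The sequence counter also means tuples are never compared on their component sets, so the heap ordering is purely priority, then arrival.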

Overview of WP Delivery Order process

A discussion of Distributed Development & Integration cannot be complete without discussing the resulting delivery challenges. I touched on this subject above, in terms of how the four example work packages would deliver back to GM based on the Delivery Order for the iteration. I also mentioned that this activity mapped to an Air Traffic Controller maintaining strict control of the runway's landing order.

During the early stages of the programme, when it was just BSkyB and SkyD, the number of Work Packages (WPs) delivering within an iteration averaged around 25 (recall that we had six-week iterations: five weeks reserved for development & integration, and the sixth week reserved for stability bug fixes, during which WPs were not allowed). At peak times, especially when we had a strong drive to reach the feature-complete milestone, we'd have around 50 WPs to deliver; I recall a record of 12 or 15 WPs delivered in just one day, with hardly any regression!

Of course, when we were pushed, there were times that called for management intervention to just go ahead and deliver now, and sort regressions out later: Just get the WP in by the 5th week, use the weekend and the 6th week to fix regressions. This case was the exception, not the norm.

When BSkyB launched, we had a backlog of other customer projects with the same urgency as BSkyB, if not more urgent - and with higher expectations of delivering some complex functionality within a short, really short timeline. By the second half of 2010 we had at least 3 major customer projects being developed simultaneously, all off the common Grand Master codebase - this called for extreme control of GM deliveries.

We had on average 45 WPs planned for each iteration. We had introduced more components to the middleware stack to support new features, and also introduced new hardware platforms for each customer (I'll cover this in a later CI section)...

To manage the planning of GM deliveries we created what we called the GM Delivery Landing Order. One of my tasks on the Product Management team was to ensure the GM Delivery plan was created at the start of the iteration. Customer project managers would feed me their Iteration WP plans, highlighting all the components impacted, the estimated Integration-complete date and the Integration location. Collecting all these plans together, we then created a global delivery schedule. Of course, there were obvious clashes in priorities between projects, between WPs within projects, between components within projects, and across project interdependencies, all of which had to be ironed out before publishing the GM Delivery plan. The aim of the GM delivery plan was to baseline a timetable and publish it to the global development and integration teams. This timetable showed which WPs, belonging to which projects, were delivering on which day, from which integration location, and the estimated landing order. Once the iteration started, I had to update the plan regularly because, most of the time, WPs would slip from one week to another or be brought forward, so the GM delivery plan had to stay in sync with the latest changes.
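Building the timetable from the project plans, and spotting the most obvious clashes, can be sketched as follows. It is a hypothetical illustration: the `PlannedWP` fields mirror what the project managers supplied (components impacted, estimated Integration-complete date, Integration location), the data is made up, and it only flags one kind of clash, two WPs touching the same component on the same day; priorities and cross-project dependencies are not modelled.

```python
from collections import defaultdict
from datetime import date
from typing import NamedTuple


class PlannedWP(NamedTuple):
    """Hypothetical entry from a customer project's iteration WP plan."""
    project: str
    wp: str
    components: frozenset[str]
    integration_done: date   # estimated Integration-complete date
    location: str            # Integration location


def build_timetable(plans: list[PlannedWP]):
    """Group WPs by planned delivery day; flag same-day component clashes."""
    by_day: dict[date, list[PlannedWP]] = defaultdict(list)
    for p in plans:
        by_day[p.integration_done].append(p)

    timetable, clashes = {}, []
    for day in sorted(by_day):
        wps = by_day[day]
        timetable[day] = [f"{p.project}/{p.wp}@{p.location}" for p in wps]
        # Compare each pair of WPs landing on the same day.
        for i, a in enumerate(wps):
            for b in wps[i + 1:]:
                shared = a.components & b.components
                if shared:
                    clashes.append((day, a.wp, b.wp, sorted(shared)))
    return timetable, clashes


# Made-up plans from two hypothetical customer projects.
plans = [
    PlannedWP("CustA", "WP1", frozenset({"A", "B"}), date(2010, 9, 6), "UK"),
    PlannedWP("CustB", "WP7", frozenset({"B", "C"}), date(2010, 9, 6), "Bangalore"),
    PlannedWP("CustA", "WP2", frozenset({"A"}), date(2010, 9, 7), "Paris"),
]
timetable, clashes = build_timetable(plans)
for day, slots in timetable.items():
    print(day, slots)
print(clashes)
```

Re-running this whenever a WP slips or is brought forward is the mechanical part of keeping the plan in sync; deciding which clashing WP yields remains a judgement call.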

We had a dedicated team of Integration Engineers in the Product Team that co-ordinated and quality-controlled deliveries to GM. They relied heavily on the published GM delivery schedule, using it to drive the landing order for WPs on the day. The GM Delivery Lead would send out a heartbeat almost every hour, or as soon as WPs were delivered, to refresh everyone on the latest status of the landing order.

I could spend a good few pages talking about the intricacies of controlling deliveries - but by now you should get the drift. Take a look at the following graphic that summarizes most of the above.

Grand Master WP Deliveries Dashboard (For One Sample Iteration)

GM Timetable: WPs from Each LM, Timeline for Whole Iteration