A couple of years ago, I wrote an exhaustive piece on why software and systems projects need to focus on effective quality management across the board. It covered the various aspects of quality management in a fair amount of detail, drawing on a past project of mine as a case study.

In that paper, I closed by touching on how we, the management team, intervened to improve the quality of releases: we injected Root Cause Analysis into the project stream by embedding a Quality Manager in the team (~250 people, 20-odd development teams). The role was to monitor various aspects of quality, covering escaped defects, quality of defect information, component code quality, build & release process quality, etc. This manager met with component teams on a weekly basis, instigating interventions such as code reviews, mandatory fields in the defect tracking tool (for better metrics / data mining), static analysis tools, and so on. The intervention produced a marked improvement across the board, from individual component quality right through to final system release quality. This was a full-time role: the person was a deeply technical, very experienced engineer, and had to have the soft skills to deal with multiple personalities and cultures. Because we were distributed across the UK (2 sites), Israel, France and India (2 sites), we had in-country quality champions feeding into the quality manager.

In that project, for example, we ended up with something like this. The snapshot was taken at a point in the project (Release 19, not the end) and shows progress over 11 System Releases, capturing 33 weeks of data, where each release was time-boxed to a three-week cycle:

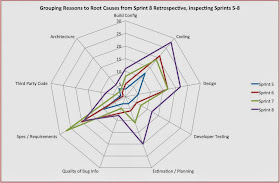

Starting off, in order to do a reasonable job of RCA, we had to trust the quality of the data coming in. Our source was the defect information captured in the defect tracking tool, ClearQuest. The stacked bar graph at the top of this picture shows how we graded the quality of defect info: Dreadful, Poor, Partial & Good. The goal was to achieve Good, reliable defect information. Over time, through lots of communication and other interventions, we saw an improvement such that there was barely any trace of Partial / Poor / Dreadful defects. The radial graph at the bottom measures the trend of root causes per release, and the movement of causes from one release to another. At the end of Release 19, we still had some "Design" causes to get under control, but we'd seen a substantial improvement in the level of "Coding", "Architecture" & "Unknown" issues. For each of the causes, the team implemented interventions, as described here.

In this post, I drill down into a component development team that's only just starting with agile / scrum, to show how the team can adopt similar Root Cause Analysis (RCA) principles in managing the quality of their own component delivery, by using the Scrum Retrospective as a platform to implement an Inspect & Adapt quality improvement feedback loop.

The scenario is a fairly young development team, passionate about agile / scrum, that is hard pressed to develop an application against very challenging timelines. As a result, some of the core practices of Extreme Programming (XP), such as unit testing and continuous integration, haven't been implemented. Instead, the team consists of a handful of developers and some QA/Testing people. Before you shout blasphemy, that agile / lean works with a cross-functional, fully self-organising team where roles and responsibilities are fully interchangeable: the reality is that a lot of teams new to agile / scrum start by embedding a separate Dev team and QA/Test team under one team. This complement is still considered the "Dev Team", and within the Sprint / Iteration itself there is time allocated for coding, followed by testing.

The testing is done manually, given that unit tests & continuous integration are not in place. Testing happens almost daily, or as frequently as possible: when a feature is completed, the testing team tests it and feeds back to development. Development fixes the issues that can be fixed within the sprint. All issues not fixed within the sprint are parked and added to the backlog for the next sprint. The test team then makes the call on whether the component is fit for release to the wider system integration (SI) team. Sometimes builds are rejected, and the pipeline downstream to SI is halted. This is the reality for some teams that are still growing and changing their agile mindset.

So how does this test team force improvements back to development? One way is to start looking for root causes: gather data, get the metrics, process the metrics and start reporting. Use the retrospectives as the platform to highlight the quality issues. A lean mindset, be it agile or scrum, involves a lot of process inspection and adaptation, with feedback loops back into the team to measure and track improvements. Inspect and Adapt. Put this into action and see how it goes.

Let's see how we can make sense of this. It is based on a recent coaching chat I had with the component test lead:

For every issue picked up during testing, start collecting data by asking the developers for the reasons behind the bug. Just collect the feedback; you don't need to make sense of it immediately. Wait a few sprints to gather the data, and then, at the appropriate time, present what your team has found so far in a retrospective. Show the data using some kind of visualisation that highlights the problem areas. Engage in a discussion with the dev team, using the Scrum Master or Product Owner as facilitator. Try to get to the bottom of the root causes for the issues. Identify some intervention or improvement plan, then track progress. Subsequent progress measurements can be done every sprint, or you could wait until enough inspection data is available to feed the next retrospective.

Let's imagine the test lead has started this activity from Sprint 5, and is ready to kickstart the discussion at the Sprint 8 retrospective. The picture to frame the initial discussion could look like this:

This picture is rather busy, and will likely trigger a good, productive conversation. These are all the reasons the developers gave to explain why each bug happened. If they ask for details, the test lead can provide the underlying data: the issue, the date/time, the developer who responded, etc. A simple list can track this; you don't have to use a complicated bug tracking tool. Busy as it is, the picture still makes it clear that there is a quality problem that has been growing since Sprint 5.
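To give a feel for how little tooling the "simple list" needs, here is a minimal sketch in Python. The field names, the `BugReason` record and the sample entries are all my own assumptions, invented purely for illustration; they are not data from the team described here.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class BugReason:
    issue: str        # short description or id of the issue found in testing
    sprint: int       # sprint in which the issue was picked up
    logged_on: date   # when the developer's answer was recorded
    developer: str    # who responded
    reason: str       # the developer's own explanation, kept verbatim


# Invented entries, purely for illustration.
bug_log = [
    BugReason("Login crash", 5, date(2000, 1, 3), "dev_a", "Missed a null check"),
    BugReason("Wrong total", 5, date(2000, 1, 4), "dev_b", "Story was ambiguous"),
    BugReason("Save fails", 6, date(2000, 1, 18), "dev_a", "Missed a null check"),
    BugReason("Slow search", 6, date(2000, 1, 19), "dev_c", "Ran out of time"),
    BugReason("Menu freeze", 6, date(2000, 1, 20), "dev_b", "Missed a null check"),
]


def reasons_per_sprint(log):
    """Return {sprint: Counter of stated reasons}, ready for plotting or reporting."""
    counts = {}
    for entry in log:
        counts.setdefault(entry.sprint, Counter())[entry.reason] += 1
    return counts


for sprint, reasons in sorted(reasons_per_sprint(bug_log).items()):
    print(sprint, dict(reasons))
```

A spreadsheet would do the same job; the point is only that each entry keeps the raw reason alongside the sprint, so the counts per sprint can be charted later without reinterpreting anything.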

The next step is to process the reasons by grouping them into possible causes. Root cause identification is an iterative process: each cause can itself be drilled down further and further until the ultimate culprit is nailed down. By way of illustration, below is just the first level of the RCA, the result of the development team grouping reasons into possible causes:

With this in hand, you can have another go at presenting a much clearer visual:

This view is so much clearer: you can see the root causes gravitating towards Coding in general, while it also highlights areas for improvement around Requirements, Planning, Design and, to some extent, Developer testing.
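If the reasons are already being counted, the first-level grouping can be sketched as a simple lookup. This is only an illustration under my own assumptions: the reason strings and the `REASON_TO_CAUSE` mapping are hypothetical, and in practice the mapping is whatever the team agrees in the retrospective. Anything the team has not yet classified falls into "Unknown".

```python
from collections import Counter

# Hypothetical mapping; the real one comes out of the team's own discussion.
REASON_TO_CAUSE = {
    "Missed a null check": "Coding",
    "Copy-paste error": "Coding",
    "Story was ambiguous": "Requirements",
    "Ran out of time": "Planning",
    "Did not test before handing over": "Developer testing",
}


def group_by_cause(reason_counts, mapping=REASON_TO_CAUSE):
    """Roll counts of stated reasons up into counts per first-level cause."""
    causes = Counter()
    for reason, count in reason_counts.items():
        # Reasons the team has not classified yet are kept visible as "Unknown".
        causes[mapping.get(reason, "Unknown")] += count
    return causes


# Invented counts, for illustration only.
sample = {
    "Missed a null check": 3,
    "Copy-paste error": 2,
    "Story was ambiguous": 1,
    "Works on my machine": 4,
}
print(group_by_cause(sample).most_common())
```

Keeping an explicit "Unknown" bucket is a deliberate choice: a large "Unknown" count is itself a signal that the team needs another round of drilling down.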

In the retro, the team will have agreed to tackle some burning areas, say Coding in particular. They agree on interventions, make them clearly visible on a board or any other information radiator, and agree to track these improvements more closely…

Jump ahead to Sprint 11, and the picture could look like this:

Sprint 11 (the purple shaded area closest to zero, the innermost region) has seen a remarkable improvement across the board, although the team still hasn't quite cracked the "Developer Testing" element, which could be the next area to focus on. Coding issues have come down, which may be a result of improving the team's internal coding standards, reviews, checkpoints, etc. Estimation/Planning seems to be better under control, which may point to the team having a better grip on their backlog, or using other measurements to estimate better…
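The same comparison can be made without a chart by looking at the change per cause between two sprints. A minimal sketch follows; the `cause_deltas` helper and the numbers are invented for illustration and are not the figures behind the picture.

```python
def cause_deltas(earlier, later):
    """Return {cause: change in count} between two sprints (negative = fewer issues)."""
    causes = sorted(set(earlier) | set(later))
    return {cause: later.get(cause, 0) - earlier.get(cause, 0) for cause in causes}


# Invented per-cause counts, for illustration only.
sprint_8 = {"Coding": 9, "Developer testing": 4, "Planning": 3}
sprint_11 = {"Coding": 3, "Developer testing": 4}

for cause, delta in cause_deltas(sprint_8, sprint_11).items():
    print(f"{cause}: {delta:+d}")
```

A cause whose delta stays at zero, like "Developer testing" in this made-up example, is a natural candidate for the next retrospective.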

Inspection and adaptation, measurement and metrics, constant feedback loops to continuously improve quality, and pushing back to improve the quality of upstream components are all part of the fundamental mindset of any lean / agile endeavour. Young teams, without proper coaching and guidance, fall into the trap of misunderstanding core principles, failing to take time to consider what process controls to put in place. An agile team must own some bit of structure; it is not a free-for-all, ad hoc, willy-nilly excuse for poor discipline and management…

To conclude, agile teams must continuously inspect and adapt. This includes taking time out to measure, analyse and look for probable causes of issues around quality, process bottlenecks, etc. Root Cause Analysis (RCA) is a useful, proven method for driving improvements. I've not dealt with the RCA technique in detail, only illustrated how it could be done at a simple level with the development team themselves. If you would like to dig deeper into this, I'd advise you to seek out someone skilled and experienced in facilitating discovery sessions and driving the RCA process...

I like the way that you have collected data to show the impact of retrospectives on the issues that you discovered using RCA. It's clear from the data that the team addresses the major issues.

I've worked with agile teams to estimate and measure the quality of the software that they deliver; see http://www.benlinders.com/2012/steering-product-quality-in-agile-teams/. I've combined it with Root Cause Analysis to address recurring and major quality issues, which showed that by focusing on those issues the team improved and didn't make similar mistakes.